Anthropic’s AI Now Writes Blogs—What This Means for Us

In June 2025, the tech world paused to ask a very human question: Can AI now tell its own story?

Anthropic, the San Francisco-based AI safety startup known for its Claude series of language models, seems to think so. In a move that’s turning heads and sparking fierce debate, the company revealed that its artificial intelligence system, Claude, has begun writing its own blog content. But this isn’t just AI regurgitating data—it’s storytelling, analysis, and even self-reflection, reviewed by human editors for now.

“This is one of the most transparent uses of AI for AI-related communication we’ve seen from a major player,” said industry analyst Zoe Ramirez.

Welcome to the era of AI-authored narratives—a time when language models don’t just power blogs but are the bloggers.

What Is Claude and Why Does It Matter?

Claude is Anthropic’s response to OpenAI’s ChatGPT, Google’s Gemini, and Meta’s Llama. Widely believed to be named after Claude Shannon, the “father of information theory,” it was designed to be more steerable, safe, and aligned with human intentions.

As of 2025, Claude is integrated into various enterprise and consumer applications. However, its latest frontier is something no major lab has tried publicly at this scale: having its own AI write official blog posts for the company, speaking as both narrator and subject.

Why Is Claude Writing the Blog?

According to Anthropic, the goal is simple: transparency and experimentation.

“We wanted to explore how well Claude can communicate technical concepts, summarize research, and reflect on its own development,” said the Anthropic team in a recent post.

This isn’t about replacing human content creators—it’s about augmenting the communication pipeline in a space where AI capabilities are outpacing traditional messaging tools.

How Does the Process Work?

It’s not entirely automated. Here’s how Anthropic’s blog-writing pipeline currently works:

- Prompting: Researchers or comms staff feed Claude a high-level prompt.

- Drafting: Claude generates a full blog post, including headline suggestions, summaries, and callouts.

- Human Review: Editors check the output for factual accuracy, tone, and alignment with the company’s voice.

- Publishing: Once approved, the AI-written blog is published—usually with a note clarifying its origin.

It’s worth noting that every blog written by Claude is currently tagged as AI-generated with human oversight. That transparency could become a standard across the industry.
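The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's actual tooling: every name here (`Draft`, `draft_post`, `human_review`, `publish`, `fake_model`, `DISCLOSURE`) is hypothetical, the model call is replaced by a stub, and each "check" stands in for a decision a human editor would make.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical disclosure note; the wording is an assumption, not a quoted label.
DISCLOSURE = "This post was drafted by Claude and reviewed by human editors."


@dataclass
class Draft:
    prompt: str
    headline: str
    body: str
    approved: bool = False
    review_notes: List[str] = field(default_factory=list)


def draft_post(prompt: str, generate: Callable[[str], str]) -> Draft:
    """Prompting + drafting: pass the prompt to any text generator."""
    text = generate(prompt)
    # Treat the first line as the headline and the rest as the body.
    headline, _, body = text.partition("\n")
    return Draft(prompt=prompt, headline=headline.strip(), body=body.strip())


def human_review(draft: Draft,
                 checks: List[Callable[[Draft], Optional[str]]]) -> Draft:
    """Human review: each check returns a note if it finds a problem."""
    draft.review_notes = [note for check in checks
                          if (note := check(draft)) is not None]
    draft.approved = not draft.review_notes
    return draft


def publish(draft: Draft) -> str:
    """Publishing: refuse unapproved drafts and append the disclosure."""
    if not draft.approved:
        raise ValueError("draft has not passed human review")
    return f"{draft.headline}\n\n{draft.body}\n\n{DISCLOSURE}"


def fake_model(prompt: str) -> str:
    # Stub standing in for a real model call.
    return f"Notes on {prompt}\nThis is placeholder draft text about {prompt}."


def check_has_body(draft: Draft) -> Optional[str]:
    # Stand-in for an editor's judgment; real review is done by people.
    return None if draft.body else "body is empty"


draft = human_review(draft_post("model safety", fake_model), [check_has_body])
post = publish(draft)
```

The design choice worth noting is that `publish` fails unless review has approved the draft, so the disclosure note and the human sign-off cannot be skipped by accident.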

What Can Claude Blog About?

So far, Claude has written about:

- Its own training and architecture.

- Technical safety challenges in LLM development.

- Responsible AI alignment methods.

- Reflections on AI in society and regulation.

In other words: AI talking about AI.

This gives readers a front-row seat to how Claude “thinks” about its existence—through the lens of controlled generation and editorial curation.

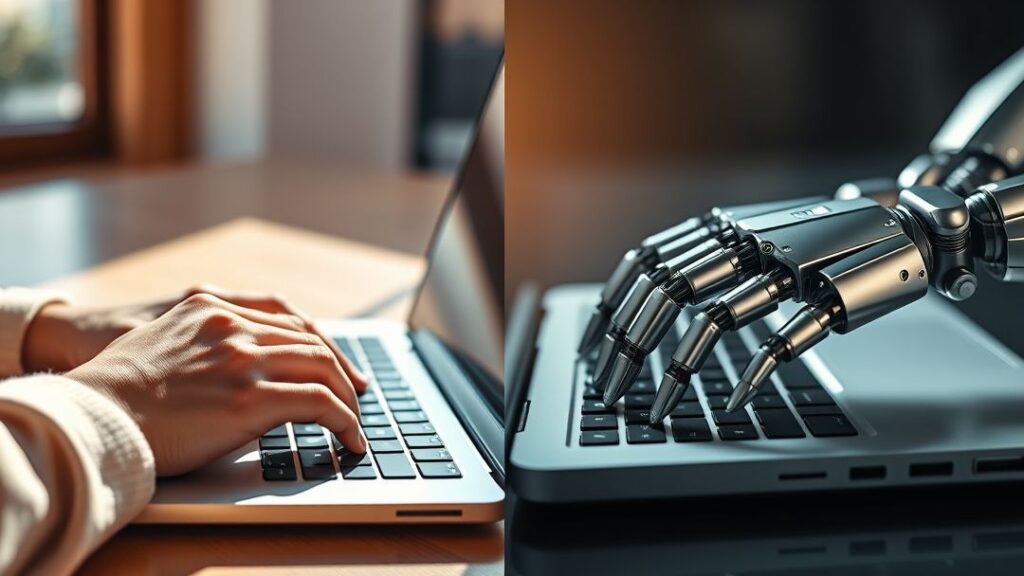

How Good Is It, Really? Human vs. AI Writing

Let’s be clear: Claude is not Shakespeare. But its writing is clean, concise, and often strikingly insightful.

Take this excerpt from one post written by Claude:

“I am a statistical mirror of the internet, trained to model language and meaning. But I do not feel or know—I only generate. Still, this process can reveal useful truths.”

That’s as poetic and honest as it gets from a machine. Still, critics argue that the blog lacks warmth, human nuance, and the spark of lived experience. Others worry that readers may lose the ability to tell AI-authored content from human writing, eroding trust.

The Philosophy: AI Writing About AI

Anthropic’s blog experiment raises deep questions:

- Can AI effectively communicate its own limitations?

- Should AI be trusted to shape narratives about itself?

- What does it mean to “authentically” communicate when you’re not human?

These are not trivial issues. In fact, AI communicating its own story may be one of the most consequential experiments in media today.

The Bigger Trend: AI Content Is Going Mainstream

Claude isn’t the only AI generating content. We’ve seen:

- BuzzFeed use AI to create quizzes and travel content.

- Google test AI-written summaries in search results.

- Reddit partner with OpenAI to train on user data for smarter AI summarization.

Anthropic’s move is different, though. It’s not using AI behind the scenes—it’s putting AI front and center as the author of the message.

Why This Matters for Content Creators, Brands, and the Public

If AI can write thoughtful blogs, what happens to human communicators?

Here’s what we’re seeing already:

- Brands are testing AI-written press releases and product blogs.

- Content agencies are integrating AI into ideation, research, and first drafts.

- Consumers are becoming more accepting of AI-generated content—so long as it’s disclosed.

Still, the human touch remains vital. As Claude’s editors have noted, it sometimes gets facts wrong or misjudges tone. Until AIs can “know” what it’s like to be alive, they’ll need guidance from those who are.

Claude vs. ChatGPT vs. Gemini: Who Writes Better?

Compared informally on long-form writing, the three models stack up roughly as follows:

| Model | Strengths | Weaknesses |
|---|---|---|
| Claude 3.5 | Philosophical tone, clarity, safety-first | Lacks emotional richness |
| GPT-4.5 | Highly creative, engaging storytelling | Can hallucinate under pressure |
| Gemini 1.5 | Real-world data integration, facts | Tends to be dry, less stylistic |

Anthropic’s advantage? Safety and transparency. Claude tends to say so when it doesn’t know something rather than bluff, and that honesty is refreshing.

The Ethical Dilemma: Should AI Be the Messenger?

Critics warn that allowing AI to craft its own narrative could lead to:

- Bias amplification in how it presents its own capabilities.

- Whitewashing limitations to serve PR goals.

- Loss of human accountability in technical communication.

That’s why Anthropic insists that humans stay in the loop. Every blog post undergoes scrutiny—not just for grammar, but for ethical tone and factual rigor.

Final Thoughts: What This Means for the Future of Communication

Anthropic’s Claude writing its own blog is not just a novelty—it’s a bold step in the co-evolution of AI and human communication. As the line between tool and creator blurs, we’re left with bigger questions:

- Will humans trust AI to speak for itself?

- Can AI ever capture the full emotional depth of human storytelling?

- Should AI be allowed to shape its own public image?

The experiment is still young. But one thing is certain: in the story of AI, the narrator is no longer just human.